The London Market Conference 2025 unfolded against the backdrop of a softening market cycle, an environment that many speakers argued should act as a catalyst for innovation rather than a constraint.

Delegated authority, MGAs, new product development and broker facilities all featured heavily in the day’s conversations.

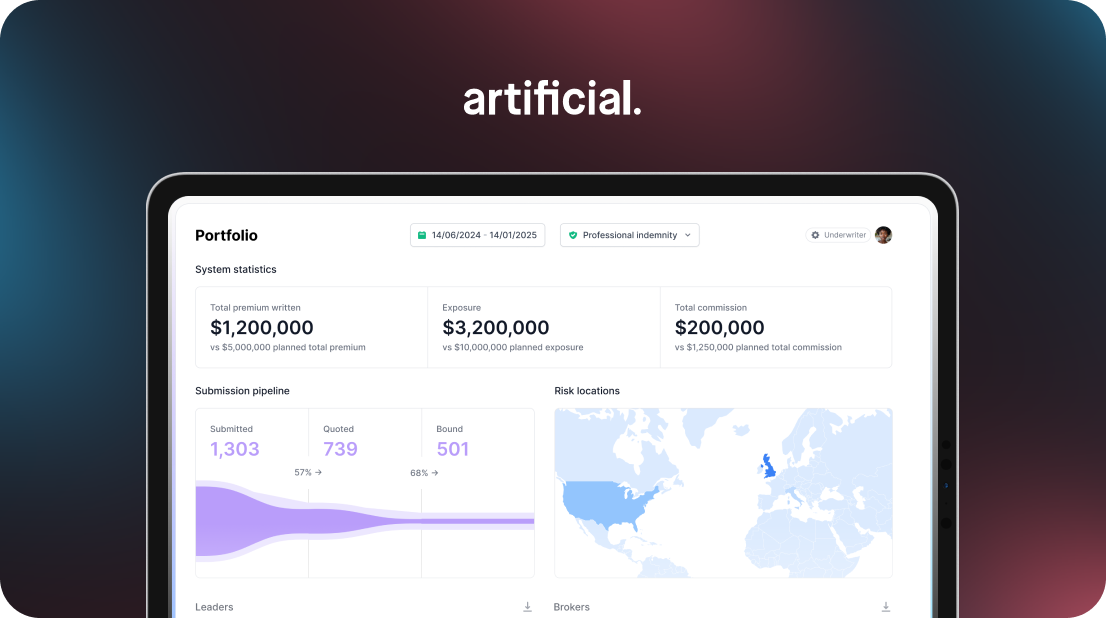

In his keynote on AI in underwriting, Artificial Co-CEO David King argued that addressing the London Market's data problem is the only way to unlock the true potential of AI in specialty underwriting. While existing models offer agility and cost-efficiency, their success hinges on structured, transparent and auditable data.

David’s talk centred on three pivotal themes: the current state of AI in underwriting, the barriers holding it back, and the practical steps carriers and brokers can take to move forward.

The challenge: constrained by data and process

AI is already reshaping how underwriters triage risks, flag inconsistencies, and manage documentation. It is speeding up processes that have historically slowed the market down. Yet these gains are only sustainable where robust audit trails and codified decision-making are in place.

Even the most sophisticated AI is constrained when fed incomplete or unstructured data. Underwriters receive as much as 80% of their data from brokers in unstructured formats such as emails, spreadsheets, and PDFs.

This forces underwriters to spend too much time sorting documents and performing repetitive manual data entry, which creates bottlenecks and limits their ability to make portfolio-level decisions.

The second major constraint is the lack of robust audit trails. AI's true potential is only unlocked when integrated with platforms that have clearly codified processes and a structured, interrogable record of how decisions are made.

Without this foundation, AI cannot operate at scale, deliver consistent outcomes, or provide the transparency that regulators and governance teams increasingly expect.

The same applies to the growing momentum behind smart follow vehicles. The consensus at the conference was stark: those without scalable follow capabilities risk falling behind, and ‘slow follow’ models could be phased out entirely within the current cycle.

Similarly, calls on some panels for more proactive participation in consortia highlighted the need for tighter integration between underwriting capital and data-led decision infrastructure.

What AI enables for underwriters today

Despite these challenges, AI is already transforming underwriters’ daily work: a 2025 LMA survey found that as many as 40% of underwriters are using the technology. Data extraction is the primary use case, with 74% of underwriters seeing extraction of data from unstructured documents as AI’s main benefit.

When harnessed correctly, AI offers powerful capabilities to underwriters:

Data ingestion and enrichment: Large Language Models (LLMs) excel at parsing and structuring data from documents like emails, spreadsheets, and PDFs. AI can automatically flag missing fields or inconsistencies and enrich data using internal models or external data sources.

Agentic AI for communication and manual tasks: The emergence of ‘underwriter agents’ and ‘broker agents’ allows AI to communicate and complete tasks that previously required manual intervention, such as handling follow-ups, triaging submissions, and even generating preliminary quotes.

Contract certainty and generation: AI can automatically compare contract wordings and flag changes or non-standard clauses, which speeds up the review process and reduces compliance risk. Tools like Artificial’s Contract Builder can draft MRCv3-compliant contracts by pulling structured data directly from submissions, turning an error-prone task into a fast, auditable process.
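To make the “flag missing fields or inconsistencies” step more concrete, it can be pictured as a validation pass over data that an LLM has already extracted from a submission. This is a minimal sketch under stated assumptions: the field names (`insured_name`, `inception_date` and so on) and the rules are hypothetical illustrations, not Artificial’s platform logic or any market standard.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical required fields for an extracted submission (illustrative only).
REQUIRED_FIELDS = ("insured_name", "inception_date", "expiry_date", "sum_insured", "currency")


@dataclass
class Flag:
    field: str
    issue: str


def check_submission(submission: dict) -> list[Flag]:
    """Return flags for missing fields and simple internal inconsistencies."""
    # A field counts as missing if it is absent, None, or an empty string.
    flags = [
        Flag(name, "missing")
        for name in REQUIRED_FIELDS
        if submission.get(name) in (None, "")
    ]

    # Cross-field check: the policy period must run forwards.
    inception = submission.get("inception_date")
    expiry = submission.get("expiry_date")
    if isinstance(inception, date) and isinstance(expiry, date) and expiry <= inception:
        flags.append(Flag("expiry_date", "expiry is not after inception"))

    # Single-field sanity check on the sum insured.
    sum_insured = submission.get("sum_insured")
    if isinstance(sum_insured, (int, float)) and sum_insured <= 0:
        flags.append(Flag("sum_insured", "must be positive"))

    return flags


if __name__ == "__main__":
    extracted = {
        "insured_name": "Example Shipping Ltd",
        "inception_date": date(2025, 7, 1),
        "expiry_date": date(2025, 6, 30),
        "sum_insured": 5_000_000,
        "currency": "",
    }
    for flag in check_submission(extracted):
        print(f"{flag.field}: {flag.issue}")
```

Run on the sample record, this prints `currency: missing` followed by `expiry_date: expiry is not after inception`. In practice, the rules would come from a carrier’s own data standards rather than being hard-coded.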

These capabilities do not just improve efficiency; they shift the underwriter’s role from administrator to portfolio strategist.

Overcoming the data problem

AI’s greatest value lies in re-imagining how insurance operates and delivers value to the insured.

But without structured data and robust audit trails, AI can't be safely scaled. It can’t deliver consistent decisions, nor can it meet the growing expectations of regulators and governance teams. This is where brokers play a critical role.

Data quality and availability are the number one barrier to AI adoption in underwriting operations, cited by 49% of LMA members surveyed. To overcome this, the market needs a unified approach that focuses on three areas:

1. Receiving structured data from brokers

The theme of AI connected with many of the day’s wider conversations around broking. Broker facilities, often cited as ‘flavour of the month’, are here to stay, especially as carriers view them as a low-cost growth model. But for facilities to deliver on their promise at scale, they must be underpinned by structured, high-quality data and shared standards across the placement chain.

Brokers hold the keys to the data kingdom, since structured, high-quality submission data originates with them. Transformation is therefore only possible by bringing brokers along.

But to achieve real transformation, brokers and underwriters must align around a single source of truth, from quote to bind. At Artificial, our Smart Placement and Smart Underwriting platforms tackle this challenge by providing a seamless flow of information across the risk’s journey.

2. Consistent decision frameworks and audit trails

AI needs more than just data. It also needs structure around how decisions are made. A consistent, codified framework allows every action to be explained and every recommendation to be traced back to its source.

This is what makes AI accountable. With structured audit trails in place, underwriters can operate with confidence and regulators can see exactly how decisions have been made. Without this kind of framework, the use of AI becomes limited and difficult to govern.
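One way to picture a record in which “every recommendation can be traced back to its source” is an append-only decision log. Each entry captures the inputs, the codified rule version applied, who or what acted, and the outcome. The sketch below assumes a hash-chained design (each entry stores a hash of the previous one, so later edits are detectable). This is one illustrative technique, not a description of how any particular platform works, and all names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body: dict) -> str:
    # Hash a canonical JSON encoding so identical content always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class DecisionLog:
    """Append-only, hash-chained record of underwriting decisions (illustrative)."""
    entries: list[dict] = field(default_factory=list)

    def record(self, risk_id: str, actor: str, rule_version: str,
               inputs: dict, outcome: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "risk_id": risk_id,
            "actor": actor,                # human underwriter or AI agent
            "rule_version": rule_version,  # which codified rule set was applied
            "inputs": inputs,              # the data the decision relied on
            "outcome": outcome,
            "prev_hash": prev_hash,        # links this entry to the one before it
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = DecisionLog()
    log.record("RISK-001", "triage-agent", "appetite-v3", {"sum_insured": 5_000_000}, "refer")
    log.record("RISK-001", "underwriter-1", "appetite-v3", {"sum_insured": 5_000_000}, "quote")
    print(log.verify())  # True
    log.entries[0]["outcome"] = "decline"  # simulate an after-the-fact edit
    print(log.verify())  # False
```

The hash chain makes tampering evident, not impossible. A production system would also need durable storage and access controls, which are outside the scope of this sketch.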

3. Shared standards between brokers and carriers

For structured data to be truly effective, everyone needs to be working from the same definitions. When brokers and carriers use shared formats and agreed field structures, it becomes possible to automate more reliably and act on insights more quickly.

This is already happening in parts of the market. Initiatives like the Lloyd’s Core Data Record and ACORD standards are helping to set expectations. But meaningful change depends on widespread adoption.

With shared standards in place, brokers and underwriters can move faster, reduce rework, and ensure the data they rely on is fit for purpose. Without them, efforts to scale AI will continue to face unnecessary friction.
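To illustrate why agreed field structures matter, the sketch below maps two brokers’ differently labelled submissions onto one shared schema, so downstream automation sees identical definitions. The schema and both brokers’ field names are hypothetical. They are not taken from the Lloyd’s Core Data Record or ACORD, whose actual definitions would apply in practice.

```python
# Hypothetical shared schema: canonical field name -> expected Python type.
SHARED_SCHEMA = {"insured_name": str, "sum_insured": float, "currency": str}

# Hypothetical per-broker mappings from each broker's own labels to the shared names.
BROKER_MAPPINGS = {
    "broker_a": {"Insured": "insured_name", "TSI": "sum_insured", "Ccy": "currency"},
    "broker_b": {"client_name": "insured_name", "limit": "sum_insured", "curr": "currency"},
}


def normalise(broker: str, record: dict) -> dict:
    """Rename a broker's fields to the shared schema and coerce their types."""
    mapping = BROKER_MAPPINGS[broker]
    out = {}
    for source_name, value in record.items():
        target = mapping.get(source_name)
        if target is None:
            continue  # unmapped fields are dropped here; a real pipeline might flag them
        out[target] = SHARED_SCHEMA[target](value)
    missing = set(SHARED_SCHEMA) - set(out)
    if missing:
        raise ValueError(f"{broker} record missing shared fields: {sorted(missing)}")
    return out


if __name__ == "__main__":
    a = normalise("broker_a", {"Insured": "Example Ltd", "TSI": "2500000", "Ccy": "USD"})
    b = normalise("broker_b", {"client_name": "Example Ltd", "limit": 2_500_000, "curr": "USD"})
    print(a == b)  # True: both brokers' data now share one definition
```

The per-broker mapping tables illustrate the rework that shared standards remove. If every broker already submitted in the shared format, the mapping step would disappear.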

A blend of experience and expertise

The future of underwriting belongs to underwriters who have the best of both worlds: technology and human expertise.

A soft market increases pressure on operational efficiency, and many carriers are now looking to codify appetite, automate low-value tasks, and improve auditability. But while technology plays a pivotal role, the market’s long-term strength still rests on human expertise.

What emerged clearly throughout the London Market Conference was that real innovation doesn’t happen in isolation. As Lloyd’s CEO Patrick Tiernan put it in his keynote speech, the future belongs to those who embrace ‘total innovation’, with a blend of capital models, product development, digital tooling and shared data executed fluidly across the market.

In the near future, AI will not only ingest and triage but also help underwriters actively shape portfolios by identifying patterns, surfacing emerging risks, and flagging new opportunities. Complex decision-making will remain human. The value lies in augmentation, not automation for its own sake.

As a market, we can't get there without sufficient data standards, robust compliance and a shared data framework. Structured data and transparent digital infrastructure will define who adapts and who gets left behind.

Will the market embrace the change?

Get in touch with our experts today to implement smart placement and underwriting in your organisation.